Two years after creating the Joint Artificial Intelligence Center to jumpstart AI development, the Defense Department is increasingly looking at how to train people to use that technology.

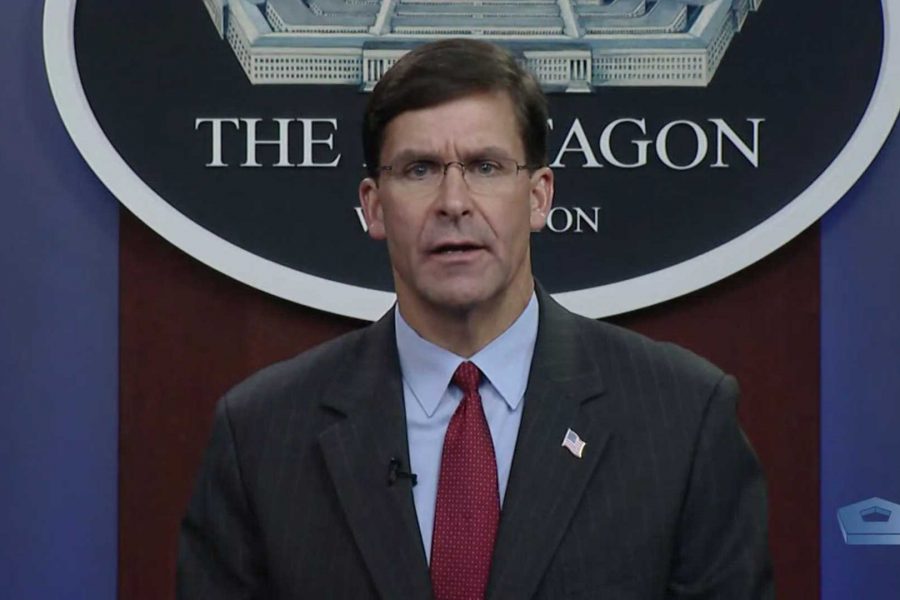

The Pentagon is working on an overarching strategy to educate all DOD personnel on how AI could factor into everything from business and human resources software to combat systems, Defense Secretary Mark T. Esper said Sept. 9 at an AI symposium run by the department. Tech experts often argue that getting people to trust algorithm-powered software is just as important as getting the algorithms and the data themselves right.

That will be particularly crucial as the Joint Staff prepares a new joint warfighting concept that heavily depends on AI to process information and connect the armed forces in new, faster ways. The plan for joint all-domain command and control will be ready in December, Vice Chairman of the Joint Chiefs of Staff Gen. John E. Hyten said.

While the armed forces have not yet broadly adopted AI, and are relying on commercial industry to drive those advancements, the promise of AI looms large. It could help service members understand what moves they could make in combat, crunch intelligence imagery more effectively, smartly maintain aircraft and other vehicles, and even task a wingman drone with surveillance or strike missions. The department wants to incorporate algorithms into weapons targeting and aircraft maneuvering software, and is working toward planes that can dogfight with other aircraft on their own.

The military maintains that AI will be used largely as a helper, not to power machines that wage war on their own without human intervention. DOD released a set of five ethical guidelines in February for AI deployment that is responsible, equitable, traceable, reliable, and governable.

“The decision to go into a conflict cannot be based on artificial intelligence, it has to be based on human intelligence and the human decision process,” Hyten said. In other words, AI can provide a better picture of what’s happening around us, and tell us how we might want to react, but the U.S. could not start a war without people making that decision to proceed.

DOD hopes that teaching people about ethical AI use now will let the department put that technology into practice.

“Over the last six months … the department has stood up a Responsible AI Committee that brings together leaders from across the enterprise to foster a culture of AI ethics within their organizations,” Esper said. “In addition, the JAIC has launched the Responsible AI Champions program, a nine-week training course for DOD personnel directly involved in the AI delivery pipeline. We plan to scale this program to all DOD components over the coming year.”

The JAIC is starting a six-week pilot program next month to train more than 80 defense acquisition employees to incorporate AI into their programs, a move that can help spread the technology’s adoption across the department instead of relying on software developers to lead the charge.

“With the support of Congress, the department plans to request additional funding for the services to grow this effort over time and deliver an AI-ready workforce to the American people,” Esper said.

The JAIC is also looking to build global consensus on responsible and ethical AI development, as the U.S. criticizes Russia’s and China’s pursuit of AI for combat as well as domestic surveillance.

While some officials like Hyten say the U.S. is doing enough to keep up with Russia and China, others inside DOD say it’s underestimating what those countries are capable of in AI. Nicolas M. Chaillan, the Air Force’s chief software officer, said at the Billington CyberSecurity Summit on Sept. 8 that competing nations can outpace the U.S. because they are willing to cut corners.

“China doesn’t care about ethics, and I can tell you, they’re not going to let that slow them down,” he said. “They’re going to do whatever it takes to have AI, machine learning capabilities that are going to disrupt what we do. We need to be careful, right? Not always worrying about what people would think, or ethics, when really, we need to be leading. I don’t think we have that luxury right now.”

Still, most leaders are eager to frame the U.S. as a moral AI user and other countries as a threat.

“The contrast between American leadership on AI and that of Beijing and Moscow couldn’t be clearer,” Esper said. “We are pioneering a vision for emerging technology that protects the U.S. Constitution and the sacred rights of all Americans. Abroad, we seek to promote the adoption of AI in a manner consistent with the values we share with our allies and partners: individual liberty, democracy, human rights, and respect for the rule of law.”

Next week, the JAIC will start a new AI Partnership for Defense initiative with defense organizations from more than 10 foreign countries to “focus on incorporating ethical principles into the AI delivery pipeline,” Esper said. He did not say who will be involved.